AI Roadtrip

Senior Producer

One day, 300 fan-inspired episodes.

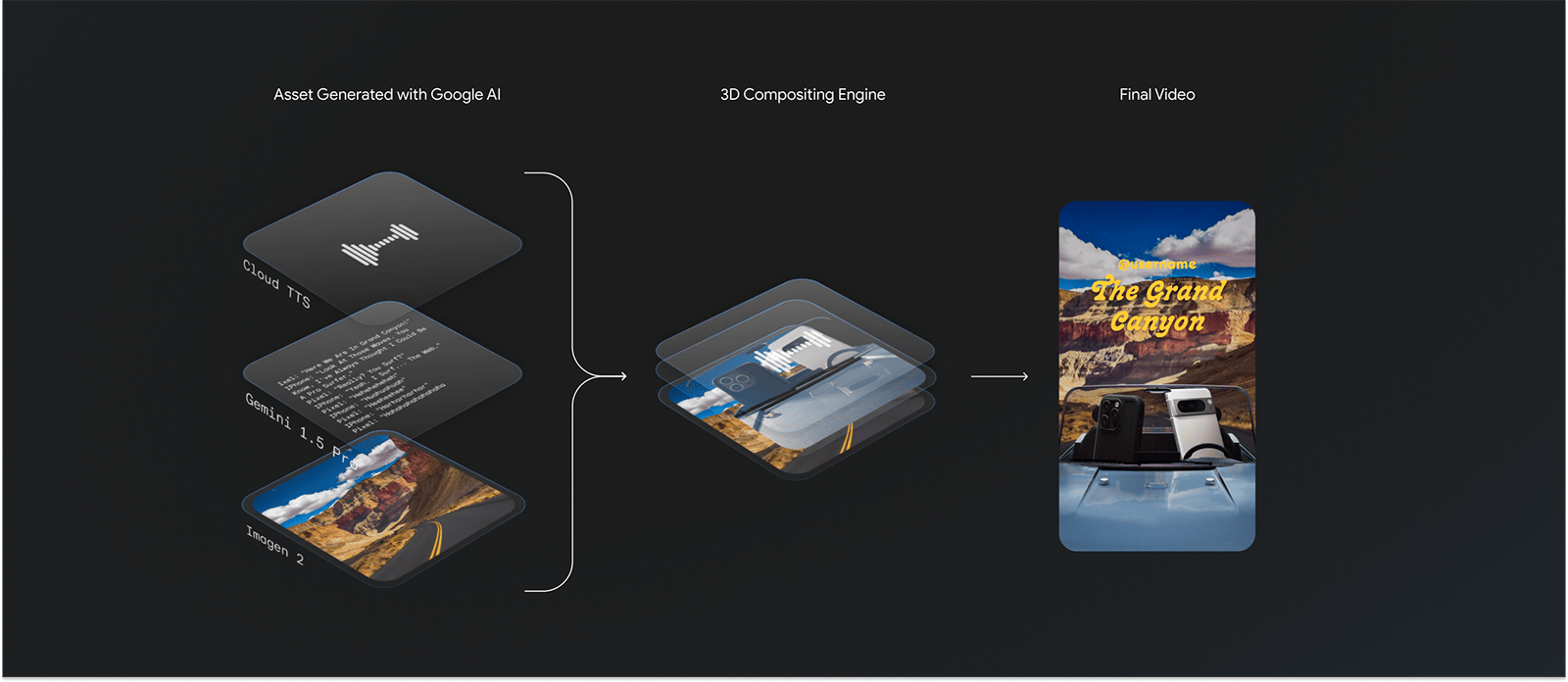

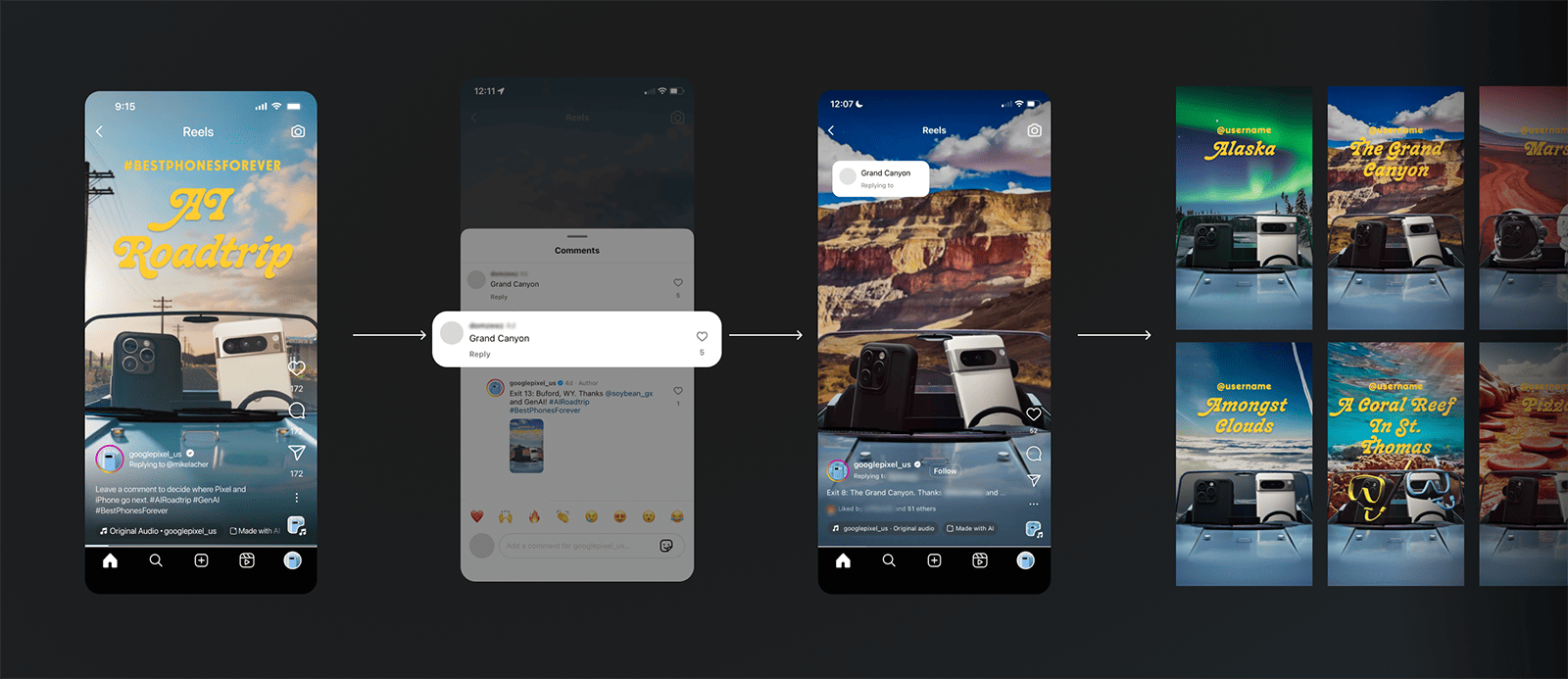

Brief: An episode on Instagram Reels explains that the two characters are going on a road trip powered by AI. When a fan comments with a location idea, our team uses a purpose-built tool to generate a custom video response within minutes. Over 16 hours, we plan to create as many unique replies as possible.

Approach: The Mill worked with Google Creative Lab to combine Google's AI tools with a custom, cloud-based render pipeline, developed by The Mill, that was capable of creating these films quickly and at scale, all from suggestions made by fans on Instagram. We used a stack of Google AI models to design a tool that balances machine efficiency with human ingenuity, keeping the creative team in the loop at every step of the process. Once the initial AI assets were generated, creators could adjust camera cuts and timing and add two layers of animation for each phone. Each video could then be submitted to a bespoke, automated Unreal Engine render pipeline hosted on Google's Compute Cloud. The live campaign ran for 16 hours and produced hundreds of videos set in fan-suggested places, which were then posted back to Google's Instagram account with the user tagged.

Impact: We engaged directly with the #BestPhonesForever fan community, and we hope some of our takeaways inspire you to explore your own creative applications of these technologies.

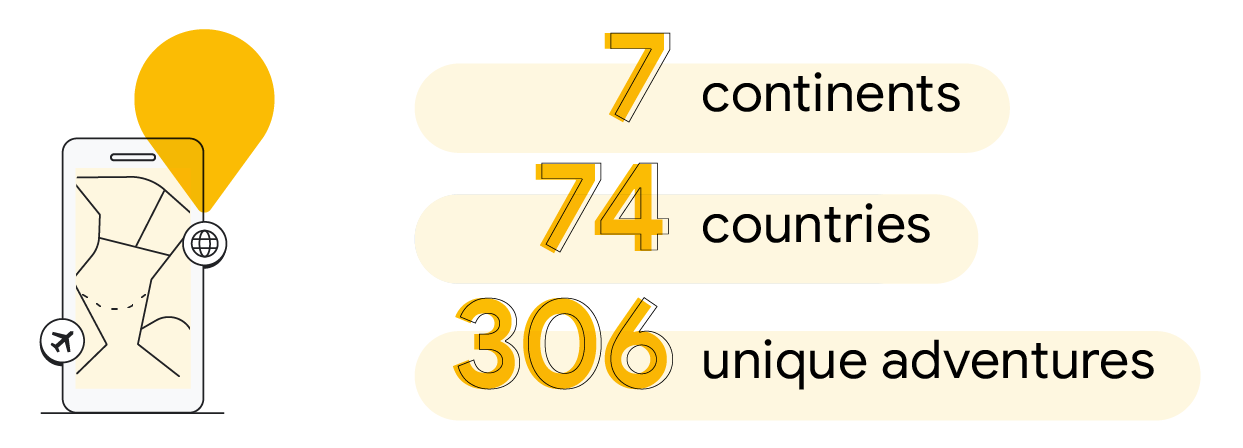

Over the course of the experiment, we sent Pixel and iPhone on 306 unique adventures, each inspired by a real user suggestion. The phones visited 74 countries across all seven continents. They crashed a wedding, explored the rings of Saturn, and ventured into imaginary realms. Ultimately, the activation gave our audience a glimpse of what’s possible with Google AI — and led to the highest-performing Google Pixel U.S. Instagram post ever.

How it Works

Non-Linear Sequence Editor

With the scene elements created, we needed to build a way for creators to adjust camera cuts and animations for each phone. We engineered the editing tool to auto-populate a timeline based on the dialog audio, meaning that a creator could theoretically click through the entire tool and still get a viable video. However, the level of control in the tool allowed for a much higher degree of customization for each video.
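As a rough illustration of how a timeline might be auto-populated from dialog audio, here is a minimal Python sketch. It is not the production tool: the `DialogLine` and `Clip` types, the track names, and the rule of one camera cut per spoken line are all assumptions made for this example, and it uses a single animation track per phone for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DialogLine:
    speaker: str     # hypothetical character id, e.g. "pixel" or "iphone"
    start: float     # seconds from the start of the dialog audio
    duration: float  # length of the spoken line in seconds


@dataclass(frozen=True)
class Clip:
    track: str   # "camera" or a per-character animation track (assumed names)
    label: str   # which shot or animation to play
    start: float
    end: float


def auto_populate(lines, characters=("pixel", "iphone")):
    """Build a default timeline from dialog timings.

    Assumed rule: each spoken line gets one camera cut on the speaker,
    a "talk" clip for the speaker and a "listen" clip for everyone else.
    """
    clips = []
    for line in sorted(lines, key=lambda item: item.start):
        end = line.start + line.duration
        clips.append(Clip("camera", f"closeup_{line.speaker}", line.start, end))
        for name in characters:
            action = "talk" if name == line.speaker else "listen"
            clips.append(Clip(f"anim_{name}", action, line.start, end))
    return clips


# Example: two lines of dialog produce a complete default timeline.
dialog = [DialogLine("pixel", 0.0, 2.5), DialogLine("iphone", 2.5, 1.5)]
timeline = auto_populate(dialog)
```

A creator could accept a default like this as-is or override individual clips, which matches the idea of a click-through path that still yields a viable video.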

Automating the Unreal Engine Render Pipeline

Once the scene elements were set and the timings refined in the sequence editor, the data was saved from the web tool and queued for our render farm to process.

The Mill developed a bespoke automation system for Unreal Engine and a dedicated queuing system for 30 Windows virtual machines (VMs) in Google's Compute Cloud.

As render jobs became available, an idle VM would pull the data for the next job in the queue via its local Node server. This would in turn kick off an automated process that opened an instance of Unreal Engine and loaded our project template. We configured our Unreal Engine project to dynamically load the project assets and data, and then set up animations and camera timings based on that data.
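The pull-based pattern described here, where each idle worker takes the next job from a shared queue, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: threads stand in for VMs, `render_stub` is a hypothetical placeholder for launching Unreal Engine, and the job dictionaries are invented for the example. The actual system used Node servers on Windows VMs.

```python
import queue
import threading


def render_stub(job):
    """Hypothetical stand-in for the automated Unreal Engine render."""
    return f"{job['id']}.mp4"


def worker(vm_name, jobs, results, lock):
    """Keep pulling the next available job until the queue is empty."""
    while True:
        try:
            job = jobs.get_nowait()  # each job is handed to exactly one worker
        except queue.Empty:
            return
        output = render_stub(job)
        with lock:
            results.append((vm_name, job["id"], output))
        jobs.task_done()


def run_farm(job_list, vm_count=3):
    """Simulate a small render farm; threads play the role of VMs."""
    jobs = queue.Queue()
    for job in job_list:
        jobs.put(job)
    results, lock = [], threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(f"vm-{i}", jobs, results, lock))
        for i in range(vm_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


# Example: ten queued videos shared across three simulated VMs.
results = run_farm([{"id": f"job-{n}"} for n in range(10)])
```

Because workers pull work rather than having it pushed to them, a slow render on one machine does not hold up the rest of the queue, which is one plausible reason to favour this design for a time-boxed live campaign.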

Read more about the process on Google Developer, Think With Google and The Mill.

Credits:

The Mill

Technology Director

Adam Smith

Creative Director

Michael Schaeffer

Executive Producer

Katie Tricot

Senior Producer

Dara Ó Cairbre

Producer

Michael Reiser

Art Director

Austin Marola

Developers

Joshua Tulloch, Keith Hoffmann, Jeffrey Gray, Chad Drobish, Dave Riegler

Colourist

Ashley Ayarza Woods

Partner: Left Field Labs